Avatar viewpoint placement

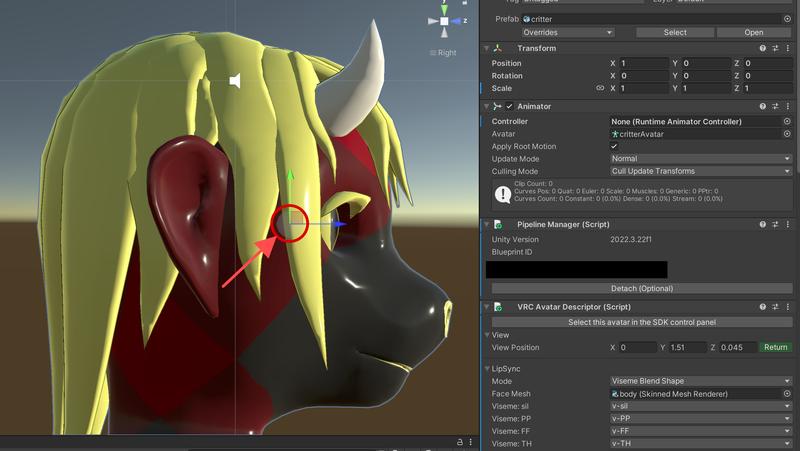

One of the most poorly-defined yet critical things in configuring a VR avatar is where exactly to place the viewpoint. It can have a lot of impact on your viewing experience, especially with how the viewpoint ends up relating to the rest of the body. This becomes especially critical in systems like VRChat and Resonite where the physics of the body are directly impacted by this placement, and especially now that VRChat has built-in functionality for allowing a first-person view of your facial features (which the critter avatar calls “first-person snooter”).

When building a humanoid avatar that has proportions that more or less match one’s physical body, you generally want the viewpoint to be situated such that it’s placed at the same relative depth from your real-life view origin compared to your shoulders, as this means that your virtual head will be tracked with the same relative offset from your virtual shoulders as they are from your real ones. Otherwise, your virtual arms will seem to be the wrong length when fully extended.

But this raises the obvious question: where’s your physical view origin?

Finding the real-life view origin

In computer graphics, the camera’s origin is perfectly well-defined. It’s a single infinitely-small point, from which all lines of sight radiate out perfectly straight. Which is to say, it’s incredibly idealized in terms of the lines of sight. It’s pretty close to how things work in real life, but in real life the lines of sight are a bit fuzzy due to focus and depth of field. But it’s close enough to reality for our purposes.

In VR, the final view origin ends up being pretty much identical to where your physical eye’s is. It’s not quite perfect and there’s a lot of wiggle room due to different facial interfaces and lens optics and so on, but those factors end up canceling out more or less and even if the camera origin doesn’t quite match your eye’s origin, you end up with a sort of virtual “reprojection” where at least basic viewpoints work out roughly the same. (If the reprojection point is too far off you start to get weird swimminess which can cause vertigo and disorientation, so it’s in the VR headset manufacturers' best interest to try to make the focal point of the display match as closely as possible to the focal point of your eye.)

In rendering we often consider the viewpoint itself to be a frustum, basically a truncated pyramid where the edges of the sides all converge directly on the centerpoint of the view. And indeed this is a perfect reflection of how lines of sight work; if you have a flat shape that’s perpendicular to the view, and then an identical flat shape that’s twice as large, it will perfectly line up with the smaller shape if the viewpoint is right where their edges converge, at least on average.

But where does this happen in the eye? I figured that it would be at its focal point — by definition, the center of the retina — but I couldn’t be sure of this. Maybe it was at the center of the eye, where the focal lines converge? Maybe it’s even at the pupil? Maybe due to the refraction within the eye it’s actually somewhere else entirely?

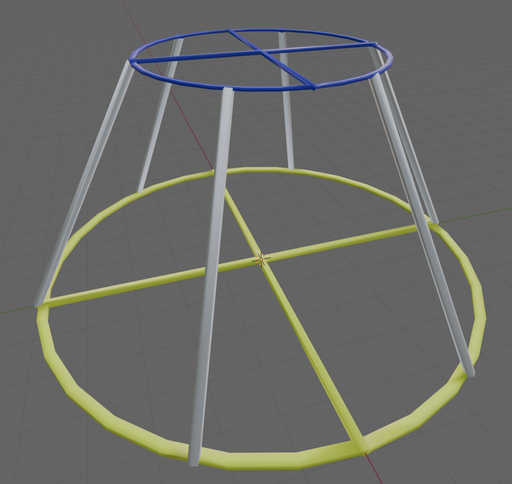

In thinking about this I started to design a little calibraton frustum object that I could 3D print to try to scientifically determine where the center of my viewpoint is…

…until I realized that all I really needed was some sort of truncated conical section with straight edges. And I have a whole bunch of those already!

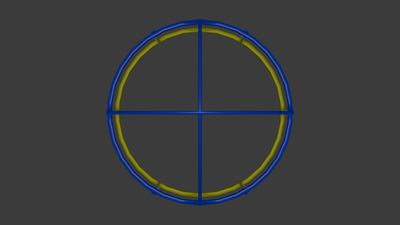

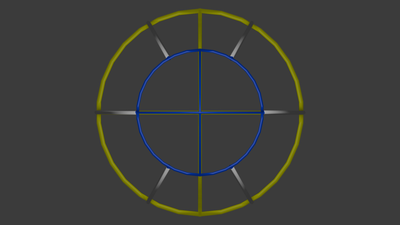

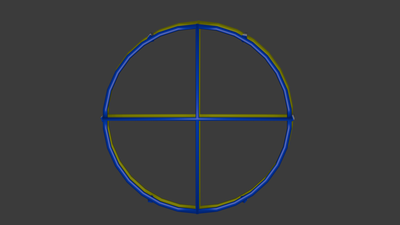

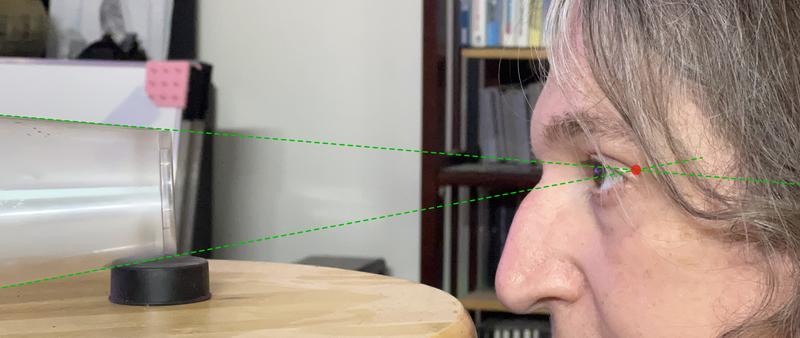

I tried cutting the bottom out of a white cup and seeing if I could orient things perfectly in a photo, but then realized I’d be much better off using a larger clear one. After a few experiments I determined that the best thing to do would be to prop a cup on its side, arrange the camera so that it’s looking right across its flat bottom edge (so that the camera is perpendicular to the cap of the frustum), and then place my head so that my eye closest to the camera is directly where the edges of the frustum converge, so that I could be sure it was centered within the frustum’s projection. Then I was able to trace the edges of the frustum to find roughly where the actual view origin of my eye is:

This isn’t the most precise setup, but I’m pretty confident in saying that the point of convergence works out being pretty much at the center of the eye, contrary to my initial assumption, but it makes sense in retrospect: the convergence point within the eye is also where all lines of sight come together.

There’s probably some work that could be done to be more precise, such as going forward with my initial idea of building a calibrated frustum object, but I’m pretty satisfied with this answer.

If anyone wants to experiment with a more formal calibration process, here’s the frustum I whipped up above, in both OpenSCAD and STL. It’s up to you to figure out how to actually manufacture it accurately, though.

Placing the viewpoint

So, in the case of placing the viewpoint of an avatar, I can think of two schools of thought about how to do it:

- Place it on the back of the avatar’s retina, to get the best representation relative to the rest of its head (especially when first-person snooter is enabled)

- Measure the distance from the back of your eye to the center of your shoulder, and find that same proportional distance on the avatar (using a scale factor I don’t particularly care to try to figure out right now), so that the arms pivot from roughly the same relative spot

In the case of my avatar those points are roughly the same, as I modeled the body rig to more or less match my physical body proportions (aside from my avatar’s head and snout being even more cartoonishly large than my real one1), but for different avatar shapes you’ll have to make different tradeoffs.

Managing body proportions

Pretty much the only objectively correct way of placing your viewpoint from a first-person perspective is to match what the creature you’re embodying sees, and put the viewpoint at the back of the retina. However this doesn’t actually solve the issue for wide swaths of avatar types, and where people get really upset about viewpoint positioning is due to things like hands and arms not working correctly.

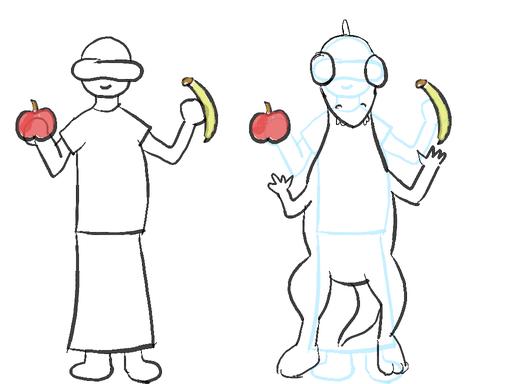

The inherent problem is that the real-life VR equipment is tracking your hands relative to your physical viewpoint (regardless of what tracking technology you’re using), and it can’t just magically change the laws of physics. So, here’s an extreme example demonstrating the problem:

You have a few choices for how to apply a proxy skeleton:

Have your viewpoint match your avatar’s, and have those teeny tiny arms always outstretched trying to match the hand position to your physical ones

Set your viewpoint such that it moves your hands to roughly match where the end of the teeny tiny arms would be (putting it somewhere in the chest) and then have the head move very weirdly when your physical head moves

Make a proxy armature, where your IK skeleton is proportioned relative to your physical body and you use rotation constraints to apply part of its motions to the avatar’s displayed skeleton

The proxy armature is the least-bad approach I’ve seen to doing this, but it still has compromises, depending on whether you proxy the hands or the head.2

Match the head, proxy the hands

If you line up your head/viewpoint and proxy your arms, your avatar’s arms will move proportionally to your physical ones (which is a bit weird from a proprioception standpoint), but also since VRChat uses your physical controller positions to track grabbing, well, interesting things can happen.

It also means that contact-based interactions with your hand are based on the physical hands, meaning that things like headpats and boops don’t work quite how folks would expect them to.

Avi’s yinglet avatar takes a modified version of this approach, by putting the player’s physical hands partway up the forearm and using constraints for the finger movements. This works particularly well owing to the specific way that yinglet proportions mismatch a human’s; if you treat the forearm as being twice as long (rather than thinking about it as a change to the elbow split), the shoulder and elbow remain Close Enough. It still has the problem with grabbed objects basically embedding into the avatar’s forearms, and physics interactions with the hands (such as contact-based boops and headpats) don’t work correctly since the contact points still map to the physical hands, but from a proprioception standpoint it works surprisingly well.

Match the hands, proxy the head

The other way is to line up your hands, and proxy your head’s position in some way. This approach works well from an interaction standpoint, but it hurts immersion, especially for receiving boops and headpats.

The head proxying can happen through armature constraints, but another approach I’ve seen is to ignore the player’s head position entirely and use physics bones to position the head instead. On extreme example of an avatar that does this is Moop’s wooden snake.

Just ignore the problem entirely

Countless avatars take the approach of just treating the avatar like a puppet, where the hands line up with some aspect (usually the hands/paws, but often the head) and then the player’s viewpoint is just a disembodied third-person observer, with the rest of the body positioned using physics or gesture controls. This is especially common for quadrupedal creatures or things like flying noodle dragons.

This has the downside of being necessarily detached, which might not be sufficient for folks who want a sense of embodying their avatar. But for a lot of players this is totally fine, and the wonder of the human mind is that even when operating a puppet, it’s still possible to feel immersed and connected.

In summary

Do whatever works.

Comments

Before commenting, please read the comment policy.

Avatars provided via Libravatar